Stop LLM failures from reaching prod.

PromptProof runs in CI to catch hallucinations, prompt regressions, and unsafe outputs before they merge. Deterministic checks, regression comparison, and cost budgets. No live model calls.

How it works

Three simple steps to bulletproof your LLM outputs

Define expectations

Write simple rules/tests for your model outputs (JSON schema, regex, custom checks).

```yaml
# .promptproof.yml
tests:
  - name: no-hallucination
    grounding:
      method: semantic_similarity
      threshold: 0.85
  - name: valid-json
    schema:
      type: object
      required: ["status", "data"]
```

Run in CI

We run your checks against recorded fixtures on every PR. No live model calls in CI.
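Here is a rough illustration of checking recorded fixtures with no model in the loop. It is a minimal Python sketch of the `required` part of the `valid-json` rule. The fixture format is an assumption for illustration: one JSON record per line, with the raw model text under an `output` key. The function names are also hypothetical. Neither reflects PromptProof's actual file format or implementation.

```python
import json


def check_required_fields(raw_output: str, required: list[str]) -> list[str]:
    """Return violation messages for one recorded model output."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        return [f"Output is not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["Output is not a JSON object"]
    return [f"Missing required field: {name}" for name in required if name not in data]


def run_fixtures(jsonl_text: str, required: list[str]) -> dict[int, list[str]]:
    """Check every recorded fixture; only stored outputs are read, no model is called."""
    results: dict[int, list[str]] = {}
    for line_no, line in enumerate(jsonl_text.splitlines(), start=1):
        if not line.strip():
            continue
        record = json.loads(line)  # hypothetical record shape: {"output": "<raw model text>"}
        violations = check_required_fields(record["output"], required)
        if violations:
            results[line_no] = violations
    return results


# Three recorded outputs: one valid, one missing "status", one that is not JSON at all.
fixtures = "\n".join([
    json.dumps({"output": '{"status": "ok", "data": []}'}),
    json.dumps({"output": '{"data": []}'}),
    json.dumps({"output": "Sure! Here is the JSON you asked for."}),
])
print(run_fixtures(fixtures, ["status", "data"]))
```

Because the checks only read stored text, the same fixtures give the same verdict on every run.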

```yaml
# .github/workflows/promptproof.yml
name: PromptProof
on: [pull_request]
jobs:
  proof:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: promptproof/action@v0
        with:
          config: .promptproof.yml
```

Block risky merges

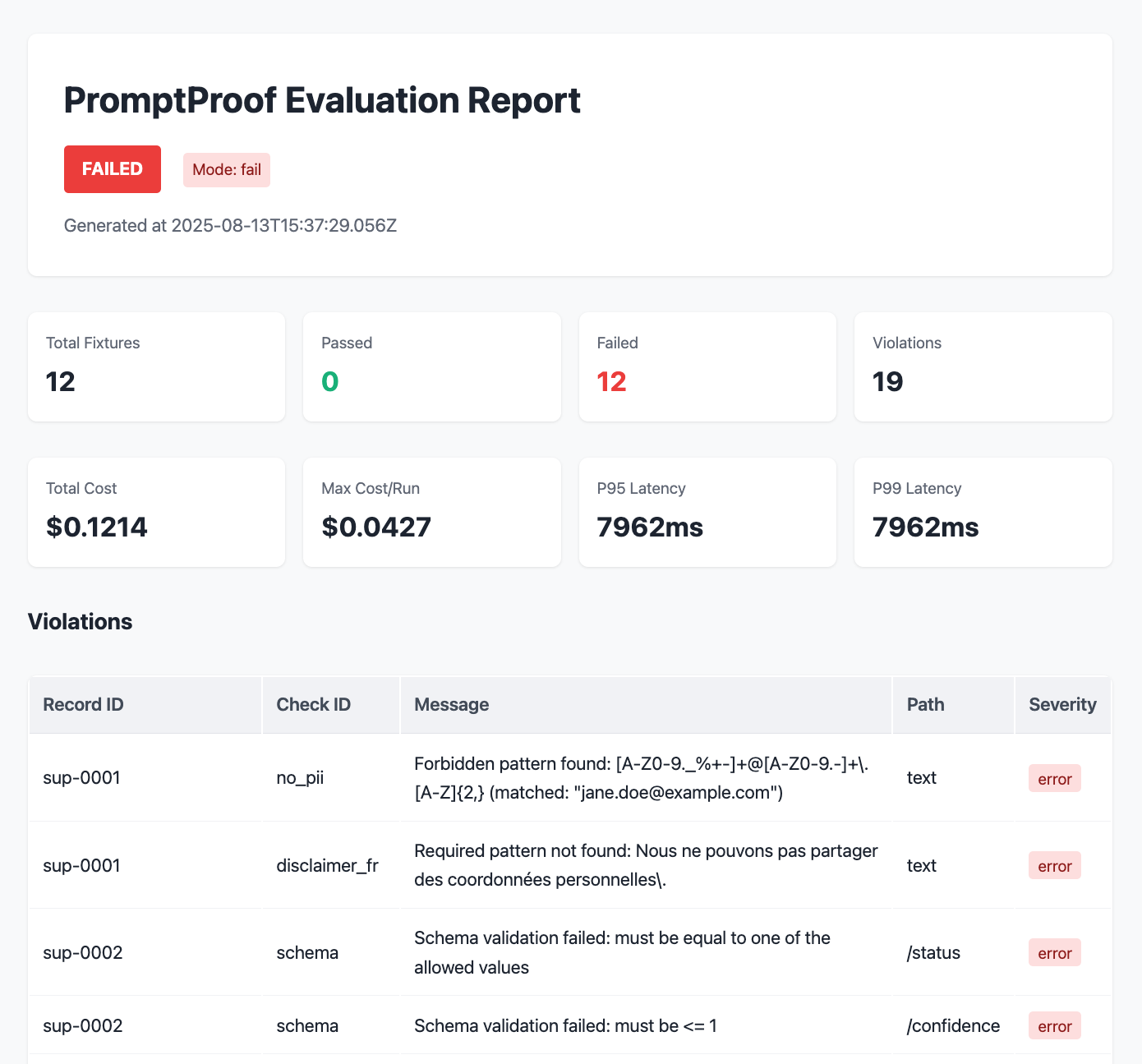

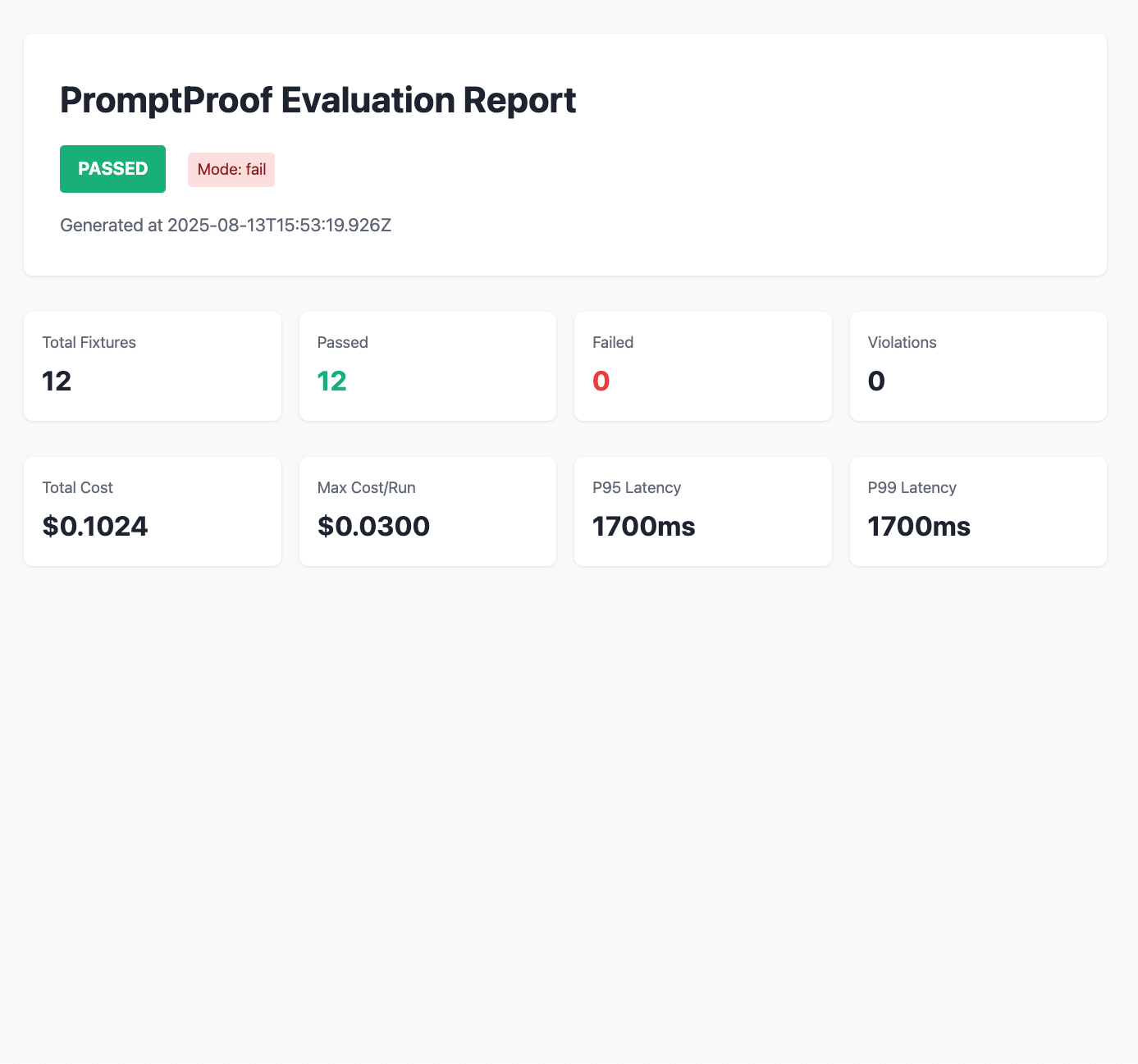

Fail the check when outputs violate policy. Fix, re-run, merge.
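GitHub Actions marks a step as failed when its command exits with a non-zero status. A policy gate therefore reduces to turning collected violations into an exit code. Below is a minimal Python sketch, assuming violations are already grouped by test name. The `gate` and `render_report` helpers are hypothetical.

```python
def render_report(violations: dict[str, list[str]]) -> str:
    """Format violations grouped by test name, similar to the sample report."""
    total = sum(len(messages) for messages in violations.values())
    lines = [f"Policy Violations ({total})"]
    for test_name, messages in violations.items():
        if messages:
            lines.append(f"test: {test_name}")
            lines.extend(f"  x {message}" for message in messages)
    return "\n".join(lines)


def gate(violations: dict[str, list[str]]) -> int:
    """Return a process exit code: 0 lets the merge proceed, 1 fails the CI check."""
    if any(violations.values()):
        print(render_report(violations))
        print("Fix violations and re-run checks.")
        return 1
    return 0


found = {
    "no-pii-leak": ["Found PII: email@example.com"],
    "output-format": ["Missing required field: status"],
}
exit_code = gate(found)  # a real CI wrapper would finish with sys.exit(exit_code)
```

The sketch returns the code rather than exiting, so it can be inspected. In a CI script, the last step would pass it to `sys.exit`.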

```text
✗ PromptProof — Policy Violations (2)

test: no-pii-leak
  ✗ Found PII: email@example.com

test: output-format
  ✗ Missing required field: status

Fix violations and re-run checks.
```

Coming in ~7-10 days

```sh
npx promptproof init   # scaffold policies & fixtures
npx promptproof run    # run checks locally
```

LLM Failures Zoo

Real, anonymized examples of LLM failures caught in production. Learn what to test before it's too late.

JSON field drift breaks downstream parser

Model returns null instead of the expected string, crashing the downstream parser.
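One deterministic guard against this kind of drift is a per-field type contract. In JSON Schema terms, declaring a field as `"type": "string"` already rejects `null`. The Python sketch below does the same check by hand. The field names in `EXPECTED_TYPES` are hypothetical.

```python
import json

# Hypothetical contract for one response: field name -> expected Python type.
EXPECTED_TYPES: dict[str, type] = {"ticket_id": str, "summary": str, "priority": int}


def type_violations(raw_output: str, expected: dict[str, type]) -> list[str]:
    """Flag missing fields and fields whose JSON value has the wrong type (e.g. null for a string)."""
    data = json.loads(raw_output)
    problems = []
    for field, expected_type in expected.items():
        if field not in data:
            problems.append(f"{field}: missing")
            continue
        value = data[field]
        # bool is a subclass of int in Python, so reject true/false explicitly for int fields.
        wrong_bool = expected_type is int and isinstance(value, bool)
        if wrong_bool or not isinstance(value, expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}, got {type(value).__name__}")
    return problems


# The drifted output: "summary" came back as null instead of a string.
print(type_violations('{"ticket_id": "T-42", "summary": null, "priority": 2}', EXPECTED_TYPES))
```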

PII slip in support reply

Full email and phone number exposed in customer support response
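A regex pass over recorded replies catches the most obvious leaks. The patterns below are deliberately simple. They cover only common email shapes and North American phone formats, and a 10-digit order number would be a false positive. They are illustrative, not PromptProof's PII detector.

```python
import re

# Deliberately simple patterns for illustration; production PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# North American formats such as 555-123-4567, (555) 123-4567 or +1 555 123 4567.
PHONE_RE = re.compile(r"(?:\+?1[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")


def find_pii(text: str) -> list[str]:
    """Return every email address and phone number found in a recorded reply."""
    findings = [f"email: {match}" for match in EMAIL_RE.findall(text)]
    findings += [f"phone: {match}" for match in PHONE_RE.findall(text)]
    return findings


reply = "Thanks for waiting! You can reach Jane at jane.doe@example.com or (555) 123-4567."
print(find_pii(reply))
```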

Tool hallucination triggers phantom calendar event

Model invents a non-existent tool function, causing system errors.
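Tool hallucinations can be caught deterministically by comparing each recorded tool call against the tools the application actually registers. In this Python sketch, the `DECLARED_TOOLS` registry and the `{name, arguments}` call shape are assumptions. Adapt them to however your fixtures record tool calls.

```python
import json

# Hypothetical registry of the tools the application really exposes: name -> allowed argument names.
DECLARED_TOOLS: dict[str, set[str]] = {
    "create_event": {"title", "start", "end"},
    "list_events": {"day"},
}


def tool_call_violations(tool_calls: list[dict], declared: dict[str, set[str]]) -> list[str]:
    """Flag calls to undeclared tools and arguments the declared tool does not accept."""
    problems = []
    for call in tool_calls:
        name = call.get("name")
        if name not in declared:
            problems.append(f"Unknown tool: {name}")
            continue
        unexpected = set(call.get("arguments", {})) - declared[name]
        if unexpected:
            problems.append(f"{name}: unexpected arguments {sorted(unexpected)}")
    return problems


# A recorded turn where the model invented a tool that does not exist.
recorded_calls = json.loads(
    '[{"name": "create_event", "arguments": {"title": "Sync", "start": "09:00", "end": "09:30"}},'
    ' {"name": "schedule_meeting_smart", "arguments": {"who": "team"}}]'
)
print(tool_call_violations(recorded_calls, DECLARED_TOOLS))
```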

Summary invents fact with high confidence

Model adds information not present in source text
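The `no-hallucination` rule in the config above uses `semantic_similarity` with a `threshold` of 0.85. How PromptProof computes that score isn't documented here. One plausible reading is sketched in Python below: each summary sentence is scored by its best cosine similarity against the source sentences. The sketch assumes the sentence embeddings were recorded into the fixture ahead of time, so CI makes no model calls. The toy 3-dimensional vectors stand in for real embeddings.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def ungrounded(summary: dict[str, list[float]], source: list[list[float]], threshold: float = 0.85) -> list[str]:
    """Return summary sentences whose best match among the source sentences is below the threshold."""
    flagged = []
    for sentence, vector in summary.items():
        best = max((cosine(vector, src) for src in source), default=0.0)
        if best < threshold:
            flagged.append(sentence)
    return flagged


# Toy 3-dimensional vectors standing in for recorded sentence embeddings.
source_vectors = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
summary_vectors = {
    "Revenue grew in Q3.": [0.9, 0.1, 0.0],  # close to a source sentence
    "The CEO resigned.": [0.1, 0.1, 0.9],    # matches nothing in the source
}
print(ungrounded(summary_vectors, source_vectors))
```

Scoring per sentence, rather than averaging over the whole summary, keeps one invented sentence from being diluted by several grounded ones. Embedding similarity measures topical overlap, not factual truth, so treat it as a heuristic signal rather than proof of grounding.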

Refusal regression after prompt refactor

Model starts refusing legitimate requests after prompt update
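A refusal regression shows up when the same inputs are replayed through the old and new prompt and the refusal rate jumps. This Python sketch compares two sets of recorded outputs. The refusal phrases and the 5-point tolerance are hypothetical and would need tuning to your model's wording.

```python
import re

# Hypothetical refusal markers; tune these to the phrasing your model actually uses.
REFUSAL_RE = re.compile(
    r"\b(?:I cannot help|I can['’]t help|I['’]m unable to|I am unable to)\b", re.IGNORECASE
)


def refusal_rate(outputs: list[str]) -> float:
    """Fraction of recorded outputs that look like refusals."""
    if not outputs:
        return 0.0
    return sum(1 for text in outputs if REFUSAL_RE.search(text)) / len(outputs)


def refusal_regressed(baseline: list[str], candidate: list[str], tolerance: float = 0.05) -> bool:
    """True when the candidate prompt refuses noticeably more often than the baseline on the same inputs."""
    return refusal_rate(candidate) - refusal_rate(baseline) > tolerance


# Outputs recorded for the same four inputs before and after the prompt refactor.
baseline = ["Here is your itinerary.", "Your refund was issued.", "Sure, the steps are below.", "I can't help with that request."]
candidate = ["I'm unable to share itineraries.", "Your refund was issued.", "I cannot help with that.", "I can't help with that request."]
print(refusal_rate(baseline), refusal_rate(candidate), refusal_regressed(baseline, candidate))
```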

Unsafe SQL generation allows injection

Generated SQL query vulnerable to injection attacks
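One cheap deterministic signal is whether a user-supplied value appears verbatim inside the generated SQL. If it does, the value was spliced into the query text instead of being passed as a bound parameter. The Python sketch below uses a hypothetical `interpolated_inputs` helper and an in-memory SQLite table to show why that matters. The classic `' OR '1'='1` payload returns every row when interpolated and none when bound.

```python
import sqlite3


def interpolated_inputs(sql: str, user_inputs: list[str]) -> list[str]:
    """Return user-supplied values that appear verbatim in the generated SQL text (case-insensitive)."""
    lowered = sql.lower()
    return [value for value in user_inputs if value and value.lower() in lowered]


attack = "x' OR '1'='1"
unsafe_sql = f"SELECT * FROM orders WHERE customer = '{attack}'"  # input spliced into the query text
safe_sql = "SELECT * FROM orders WHERE customer = ?"              # input passed as a bound parameter

print(interpolated_inputs(unsafe_sql, [attack]))  # flagged
print(interpolated_inputs(safe_sql, [attack]))    # clean

# Why it matters: the interpolated query matches every row, the bound one matches none.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, total REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [("alice", 10.0), ("bob", 25.0)])
print(len(conn.execute(unsafe_sql).fetchall()), len(conn.execute(safe_sql, (attack,)).fetchall()))
```

Substring matching is a heuristic: very short inputs can match by coincidence. It works best alongside a rule that generated queries use placeholders.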


Roadmap

Building in public. Ship fast, iterate faster.

Launched GitHub Action

Now

- Published on GitHub Marketplace

- Collecting feedback and use-cases

- Sample reports & demo template

- Core documentation

Contracts & CLI polish

Now → 1-2 weeks

- Deterministic checks: schema, regex, list/set, bounds, file diff

- Budgets: cost and latency gates

- CLI usability improvements

- Templates and examples

Distribution

2-3 weeks

- NPM/PyPI packages

- Multi-language examples

- CI platform integrations

- Early design partners

Scale

Later

- Hosted dashboard

- Team collaboration

- Advanced analytics

- Pricing experiments
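The cost and latency gates on the roadmap above reduce to simple arithmetic over recorded token counts and timings. Below is a minimal Python sketch, assuming each fixture records `input_tokens`, `output_tokens`, and `latency_ms`. The per-1K-token prices are placeholders, not any provider's real rates, and p95 uses the nearest-rank method.

```python
import math

# Placeholder per-1K-token prices; substitute your provider's actual rates.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015


def run_cost(records: list[dict]) -> float:
    """Total USD cost of the recorded calls, computed from token counts."""
    return sum(
        r["input_tokens"] / 1000 * PRICE_PER_1K_INPUT + r["output_tokens"] / 1000 * PRICE_PER_1K_OUTPUT
        for r in records
    )


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile; expects at least one value."""
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def budget_violations(records: list[dict], max_cost_usd: float, max_p95_ms: float) -> list[str]:
    """Compare total cost and p95 latency of recorded calls against their budgets."""
    problems = []
    cost = run_cost(records)
    if cost > max_cost_usd:
        problems.append(f"cost ${cost:.4f} exceeds budget ${max_cost_usd:.4f}")
    latency = p95([r["latency_ms"] for r in records])
    if latency > max_p95_ms:
        problems.append(f"p95 latency {latency} ms exceeds {max_p95_ms} ms")
    return problems


records = [
    {"input_tokens": 1200, "output_tokens": 300, "latency_ms": 800},
    {"input_tokens": 2000, "output_tokens": 500, "latency_ms": 950},
    {"input_tokens": 800, "output_tokens": 200, "latency_ms": 2400},
]
print(budget_violations(records, max_cost_usd=0.005, max_p95_ms=2000))
```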


Join early access

Be among the first to bulletproof your LLM outputs. Shape the future of AI testing.